PRESQUE ISLE, Maine — Generative artificial intelligence is a powerful new tool that has sparked much debate about its use in school classrooms.

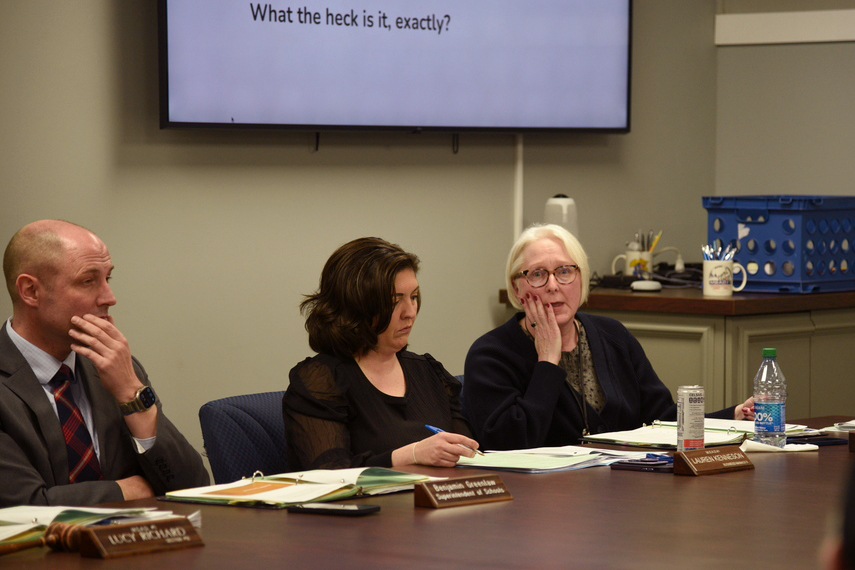

SAD1 Curriculum Director Jennifer Bourassa discussed AI’s potential uses and problems for educators during an SAD1 school board meeting on Monday, and closed by recommending that teachers talk with ChatGPT to better understand how it works.

ChatGPT is an AI system that lets a user hold conversations with it and generate emails, essays, and other written material. It produces this text using a large language model trained on vast amounts of text gathered from the internet. Plagiarism is harder to detect when students use AI to write essays because the model does not copy any single source; its output draws on patterns learned from millions of different documents.

Rather than ban ChatGPT and similar systems from classrooms outright, SAD1 plans to teach high school students how to use generative AI in the classroom and the ethics of doing so.

“We want to know that kids are using AI effectively as well as honestly and they are doing their own work when we ask them to do their own work,” Bourassa said.

Bourassa went over how AI can write essays, grade essays, and come up with detailed lesson plans.

She does not see banning AI in the classroom as a reasonable response for schools, because the technology is already built into phones and because it is hard to tell whether something was written by AI, even with programs designed to detect AI-generated text.

In one example, Bourassa gave ChatGPT a rubric and a former student’s graded paper. The AI graded the paper and produced well-written feedback in five seconds.

Generative AI finds patterns in the data it draws on, using technology loosely modeled on the way the human brain processes information.

Students can use ChatGPT as a tutor, getting feedback on their papers to improve their writing, and teachers can use it to generate and gamify lesson plans. One problem Bourassa pointed out for educators is knowing whether a student is using ChatGPT to do their work for them.

A student who knows they cannot write a perfect essay can prompt ChatGPT to produce an imperfect paper that mimics how they would have written it, Bourassa said.

“[ChatGPT] is improving very rapidly and it is probably going to be what we think of as a disruptive technology,” Bourassa said.

She compared ChatGPT as a disruptive technology to the iPhone when it first came out in 2007. Bourassa pointed out that many familiar apps, such as Google Maps, YouTube, Siri, and Netflix, already use some form of AI technology.

Teachers should ask students to write papers by hand as one way of getting around ChatGPT in the classroom, which also lets them see what each student’s own writing actually looks like, Bourassa said.

“In some ways it’s like an automatic plagiarism, which is what some people call it,” Bourassa said.

The SAD1 plagiarism policy will need an update because it is rooted in the 1980s, she said.

Another problem is AI “hallucination,” when the AI makes up an answer to a question it cannot actually answer, sometimes complete with false citations and sources.

Educators at SAD1 need to better understand generative AI and its limitations so it can be taught to students, Bourassa said.

So far, SAD1’s high school English department has been working through ChatGPT prompts with students to better understand where the AI falls short, although no structured lesson plan is in place yet.